Deploy Nginx Ingress Controller on Kubernetes

The standard way of exposing applications that run on a set of Pods in a Kubernetes cluster is to use a Service resource. Each Pod in Kubernetes has its own IP address, but a set of Pods can be reached through a single DNS name. Kubernetes can load-balance traffic across those Pods without any modification to the application. By default, a Service is assigned an IP address (sometimes called the “cluster IP”), which is used by the Service proxies. A Service identifies its set of Pods using label selectors.
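To make the label-selector mechanism concrete, here is a minimal sketch of a shell helper that prints a ClusterIP Service manifest. The function name, the Service name, the `app` label value and the port numbers are hypothetical placeholders; any Pod carrying the matching `app` label would become an endpoint of the Service.

```shell
# Hypothetical helper: print a ClusterIP Service manifest that selects Pods by their "app" label.
# Arguments: service name, app label value, service port, container port.
render_cluster_ip_service() {
  name="$1"; app="$2"; port="$3"; target_port="$4"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: ClusterIP          # default Service type; gets a cluster-internal virtual IP
  selector:
    app: ${app}            # every Pod labelled app=${app} receives traffic
  ports:
  - port: ${port}          # port exposed on the cluster IP
    targetPort: ${target_port}  # port the container listens on
EOF
}
```

For example, `render_cluster_ip_service web web 80 8080 | kubectl apply -f -` would create a Service named `web` that forwards port 80 on its cluster IP to port 8080 of every Pod labelled `app=web`.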

What is an Ingress Controller?

Before answering this question, you need to understand the Ingress object in Kubernetes. The official Kubernetes documentation defines an Ingress as:

An API object that manages external access to the services in a cluster, typically HTTP.

Ingress may provide load balancing, SSL termination and name-based virtual hosting. An Ingress in Kubernetes exposes HTTP and HTTPS routes from outside the cluster to Services running within the cluster. All traffic routing is controlled by rules defined on the Ingress resource. An Ingress may be configured to:

- Provide Services with externally-reachable URLs

- Load balance traffic coming into cluster services

- Terminate SSL / TLS traffic

- Provide name-based virtual hosting in Kubernetes
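The capabilities listed above map directly onto fields of an Ingress manifest. The following sketch prints an Ingress that combines name-based virtual hosting (`rules[].host`) with TLS termination (`tls[].secretName`). The function name and the host, Service and Secret names are hypothetical, and `ingressClassName: nginx` assumes the NGINX Ingress Controller installed later in this guide.

```shell
# Hypothetical helper: print an Ingress that routes one hostname to one Service
# and terminates TLS with a kubernetes.io/tls Secret.
# Arguments: host, service name, service port, TLS secret name.
render_tls_ingress() {
  host="$1"; service="$2"; port="$3"; tls_secret="$4"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${service}-ingress
spec:
  ingressClassName: nginx      # handled by the NGINX Ingress Controller
  tls:
  - hosts:
    - ${host}
    secretName: ${tls_secret}  # certificate + key used for SSL/TLS termination
  rules:
  - host: ${host}              # name-based virtual hosting: match on the Host header
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${service}
            port:
              number: ${port}
EOF
}
```

The referenced Secret would typically be created from an existing certificate and key, for example with `kubectl create secret tls shop-tls --cert=tls.crt --key=tls.key`.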

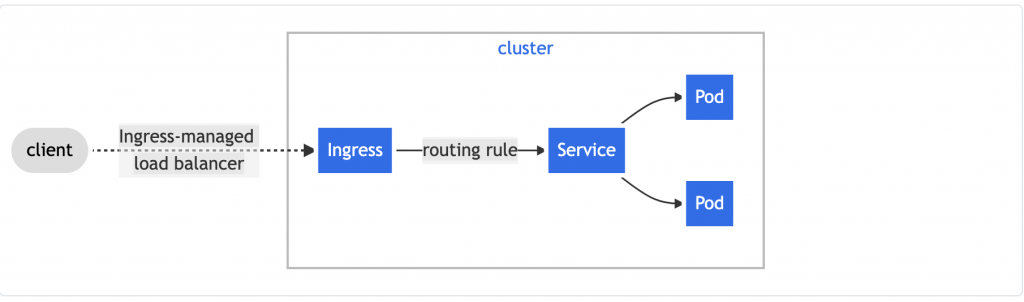

An Ingress controller is what fulfils the Ingress, usually with a load balancer. Below is an example on how an Ingress sends all the client traffic to a Service in Kubernetes Cluster:

For standard HTTP and HTTPS traffic, an Ingress controller is configured to listen on ports 80 and 443. It should bind to an IP address on which the cluster will receive traffic. A wildcard DNS record for the domain used for Ingress routes points to the IP address(es) that the Ingress controller listens on.

Kubernetes takes a BYOS (Bring-Your-Own-Software) approach to most of its add-ons, and it doesn’t ship software that performs Ingress functions out of the box. You can choose from the many Ingress controllers available; Kubedex also does a good job of summarizing the Ingress controllers available for Kubernetes.

With the basics of Kubernetes Services and Ingress covered, we can now dive into the actual installation of the NGINX Ingress Controller on Kubernetes.

Step 1: Deploy Nginx Ingress Controller in Kubernetes

We’ll consider two major deployment options, covered in the next sections.

Option 1: Install without Helm

With this method you’ll manually download the deployment manifests and apply them with the kubectl command-line tool.

Step 1: Install git, curl and wget tools

Install git, curl and wget on the bastion host where kubectl is installed and configured:

# CentOS / RHEL / Fedora / Rocky

sudo yum -y install wget curl git

# Debian / Ubuntu

sudo apt update

sudo apt install wget curl git

Step 2: Apply Nginx Ingress Controller manifest

The deployment process varies depending on your Kubernetes setup. My cluster follows the bare-metal NGINX Ingress deployment guide. For other Kubernetes clusters, including managed clusters, refer to the guides below:

- microk8s

- minikube

- AWS

- GCE – GKE

- Azure

- Digital Ocean

- Scaleway

- Exoscale

- Oracle Cloud Infrastructure

- Bare-metal

The bare-metal method applies to any Kubernetes cluster deployed on bare metal with a generic Linux distribution (such as CentOS, Ubuntu, Debian or Rocky Linux).
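The download commands below find the newest release by piping the GitHub releases API response through grep and cut. A slightly more defensive sketch, assuming a POSIX shell with sed, is shown here: it honours an optional, hypothetical `CONTROLLER_TAG` variable so you can pin a release you have already tested, and it fails loudly instead of returning an empty tag.

```shell
# Hypothetical helper: resolve the ingress-nginx release tag to deploy.
# If CONTROLLER_TAG is set (e.g. pinned to a tested release), print it unchanged;
# otherwise read GitHub "latest release" JSON on stdin and extract "tag_name".
resolve_controller_tag() {
  if [ -n "${CONTROLLER_TAG:-}" ]; then
    printf '%s\n' "$CONTROLLER_TAG"
    return 0
  fi
  # Works for pretty-printed and single-line JSON; keeps only the first match.
  tag="$(sed -n 's/.*"tag_name"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1)"
  if [ -z "$tag" ]; then
    echo "could not find tag_name in input" >&2
    return 1
  fi
  printf '%s\n' "$tag"
}
```

Usage might look like `controller_tag=$(curl -s https://api.github.com/repos/kubernetes/ingress-nginx/releases/latest | resolve_controller_tag)`. Pinning a tag makes reruns reproducible and avoids depending on the unauthenticated GitHub API, which is rate-limited. If jq is installed, `jq -r .tag_name` is an equivalent way to extract the field.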

Download the NGINX Ingress Controller deployment manifest for bare metal:

controller_tag=$(curl -s https://api.github.com/repos/kubernetes/ingress-nginx/releases/latest | grep tag_name | cut -d '"' -f 4)

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/${controller_tag}/deploy/static/provider/baremetal/deploy.yaml

Rename the deployment file:

mv deploy.yaml nginx-ingress-controller-deploy.yaml

Feel free to check the file contents and modify them where you see fit:

vim nginx-ingress-controller-deploy.yaml

Apply the NGINX Ingress Controller deployment manifest:

$ kubectl apply -f nginx-ingress-controller-deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

You can update your current context to use the ingress-nginx namespace:

kubectl config set-context --current --namespace=ingress-nginx

Run the following command to check whether the ingress controller pods have started:

$ kubectl get pods -n ingress-nginx -l app.kubernetes.io/name=ingress-nginx --watch

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create--1-hpkzp 0/1 Completed 0 43s

ingress-nginx-admission-patch--1-qnjlj 0/1 Completed 1 43s

ingress-nginx-controller-644555766d-snvqf 1/1 Running 0

Once the ingress controller pods are running, you can stop the command by pressing Ctrl+C.
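Watching the Pods and pressing Ctrl+C works interactively; in scripts it is usually easier to block until the controller is ready. The sketch below wraps `kubectl wait` with a selector on the `app.kubernetes.io/component=controller` label, which the upstream manifests set on the controller Pods. The function name and the default timeout are illustrative choices.

```shell
# Hypothetical helper: block until the ingress-nginx controller Pods are Ready.
# Optional argument: timeout (defaults to 120s).
wait_for_ingress_controller() {
  timeout="${1:-120s}"
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout="$timeout"
}
```

`kubectl wait` returns a non-zero exit status if the Pods are not Ready before the timeout, so a call such as `wait_for_ingress_controller 300s || exit 1` can gate later steps.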

If you want to run multiple Nginx Ingress Pods, you can scale with the command below:

$ kubectl -n ingress-nginx scale deployment ingress-nginx-controller --replicas 2

deployment.apps/ingress-nginx-controller scaled

$ kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-lj278 0/1 Completed 0 18m

ingress-nginx-admission-patch-zsjkp 0/1 Completed 0 18m

ingress-nginx-controller-6dc865cd86-n474n 0/1 ContainerCreating 0 119s

ingress-nginx-controller-6dc865cd86-tmlgf 1/1 Running 0 18m

Option 2: Install using Helm

If you opt for the Helm installation method, follow the steps in this section.
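Instead of downloading and extracting the source tarball as done in Step 2 below, the chart can also be pulled directly from the project’s Helm repository at https://kubernetes.github.io/ingress-nginx once Helm is installed. The sketch below wraps this in a function (its name is hypothetical) using `helm upgrade --install`, which installs the release on the first run and upgrades it on later runs; `--create-namespace` replaces the separate `kubectl create namespace` step.

```shell
# Hypothetical helper: install or upgrade ingress-nginx from the chart repository.
# Optional argument: path to a custom values.yaml.
install_ingress_nginx() {
  values_file="${1:-}"
  # Rebuild this function's positional parameters as the helm argument list.
  set -- upgrade --install ingress-nginx ingress-nginx \
    --repo https://kubernetes.github.io/ingress-nginx \
    --namespace ingress-nginx --create-namespace
  if [ -n "$values_file" ]; then
    set -- "$@" -f "$values_file"
  fi
  helm "$@"
}
```

Calling `install_ingress_nginx values.yaml` applies your customised values. The chart’s default values can be dumped as a starting point with `helm show values ingress-nginx --repo https://kubernetes.github.io/ingress-nginx > values.yaml`.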

Step 1: Install Helm 3 on your workstation

Install Helm 3 on the workstation where kubectl is installed and configured.

cd ~/

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

The installer script works on both Linux and macOS. Here is a successful installation output:

Downloading https://get.helm.sh/helm-v3.10.3-linux-amd64.tar.gz

Verifying checksum... Done.

Preparing to install helm into /usr/local/bin

helm installed into /usr/local/bin/helm

Query the Helm version to validate the installation:

$ helm version

version.BuildInfo{Version:"v3.10.3", GitCommit:"835b7334cfe2e5e27870ab3ed4135f136eecc704", GitTreeState:"clean", GoVersion:"go1.18.9"}

Step 2: Deploy Nginx Ingress Controller

Download the latest stable release of the NGINX Ingress Controller source code:

controller_tag=$(curl -s https://api.github.com/repos/kubernetes/ingress-nginx/releases/latest | grep tag_name | cut -d '"' -f 4)

wget https://github.com/kubernetes/ingress-nginx/archive/refs/tags/${controller_tag}.tar.gz

Extract the downloaded file:

tar xvf ${controller_tag}.tar.gz

Switch to the directory created:

cd ingress-nginx-${controller_tag}

Change your working directory to the charts folder:

cd charts/ingress-nginx/

Create the namespace:

kubectl create namespace ingress-nginx

Now deploy the NGINX Ingress Controller with the following command:

helm install -n ingress-nginx ingress-nginx -f values.yaml .

Sample deployment output:

NAME: ingress-nginx

LAST DEPLOYED: Thu Nov 4 02:50:28 2021

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace ingress-nginx get services -o wide -w ingress-nginx-controller'

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: example
  namespace: foo
spec:
  ingressClassName: example-class
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: exampleService
                port: 80
  # This section is only required if TLS is to be enabled for the Ingress
  tls:
    - hosts:
        - www.example.com
      secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1
kind: Secret
metadata:
  name: example-tls
  namespace: foo
data:
  tls.crt: <base64 encoded cert>
  tls.key: <base64 encoded key>
type: kubernetes.io/tls

Check the status of all resources in the ingress-nginx namespace:

kubectl get all -n ingress-nginx

Check the running Pods in the namespace:

$ kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-6f5844d579-hwrqn 1/1 Running 0

To check the logs of the Pods, use the command:

$ kubectl -n ingress-nginx logs deploy/ingress-nginx-controller

-------------------------------------------------------------------------------

NGINX Ingress controller

Release: v1.0.4

Build: 9b78b6c197b48116243922170875af4aa752ee59

Repository: https://github.com/kubernetes/ingress-nginx

nginx version: nginx/1.19.9

-------------------------------------------------------------------------------

W1104 00:06:59.684972 7 client_config.go:615] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I1104 00:06:59.685080 7 main.go:221] "Creating API client" host="https://10.96.0.1:443"

I1104 00:06:59.694832 7 main.go:265] "Running in Kubernetes cluster" major="1" minor="22" git="v1.22.2" state="clean" commit="8b5a19147530eaac9476b0ab82980b4088bbc1b2" platform="linux/amd64"

I1104 00:06:59.937097 7 main.go:104] "SSL fake certificate created" file="/etc/ingress-controller/ssl/default-fake-certificate.pem"

I1104 00:06:59.956498 7 ssl.go:531] "loading tls certificate" path="/usr/local/certificates/cert" key="/usr/local/certificates/key"

I1104 00:06:59.975510 7 nginx.go:253] "Starting NGINX Ingress controller"

I1104 00:07:00.000753 7 event.go:282] Event(v1.ObjectReference{Kind:"ConfigMap", Namespace:"ingress-nginx", Name:"ingress-nginx-controller", UID:"5aea2f36-fdf2-4f5c-96ff-6a5cbb0b5b82", APIVersion:"v1", ResourceVersion:"13359975", FieldPath:""}): type: 'Normal' reason: 'CREATE' ConfigMap ingress-nginx/ingress-nginx-controller

I1104 00:07:01.177639 7 nginx.go:295] "Starting NGINX process"

I1104 00:07:01.177975 7 leaderelection.go:243] attempting to acquire leader lease ingress-nginx/ingress-controller-leader...

I1104 00:07:01.178194 7 nginx.go:315] "Starting validation webhook" address=":8443" certPath="/usr/local/certificates/cert" keyPath="/usr/local/certificates/key"

I1104 00:07:01.180652 7 controller.go:152] "Configuration changes detected, backend reload required"

I1104 00:07:01.197509 7 leaderelection.go:253] successfully acquired lease ingress-nginx/ingress-controller-leader

I1104 00:07:01.197857 7 status.go:84] "New leader elected" identity="ingress-nginx-controller-6f5844d579-hwrqn"

I1104 00:07:01.249690 7 controller.go:169] "Backend successfully reloaded"

I1104 00:07:01.249751 7 controller.go:180] "Initial sync, sleeping for 1 second"

I1104 00:07:01.249999 7 event.go:282] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-6f5844d579-hwrqn", UID:"d6a2e95f-eaaa-4d6a-85e2-bcd25bf9b9a3", APIVersion:"v1", ResourceVersion:"13364867", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration

To follow the logs as they stream, run:

kubectl -n ingress-nginx logs deploy/ingress-nginx-controller -f

Upgrading Helm Release

I’ll set the replica count of the controller Pods to 3:

$ vim values.yaml

controller:
  replicaCount: 3

We can confirm we currently have one Pod:

$ kubectl -n ingress-nginx get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

ingress-nginx-controller 1/1 1 1 43m

Upgrade the ingress-nginx release by running the following helm command:

$ helm upgrade -n ingress-nginx ingress-nginx -f values.yaml .

Release "ingress-nginx" has been upgraded. Happy Helming!

NAME: ingress-nginx

LAST DEPLOYED: Thu Nov 4 03:35:41 2021

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 5

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

Check the current number of pods after the upgrade:

$ kubectl -n ingress-nginx get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

ingress-nginx-controller 3/3 3 3 45m

Uninstalling the Chart

To remove the NGINX Ingress Controller and all the associated resources deployed by Helm, run the command below in your terminal:

$ helm -n ingress-nginx uninstall ingress-nginx

release "ingress-nginx" uninstalled

Step 2: Configure Nginx Ingress Controller on Kubernetes

With the Ingress Controller installed, we need to configure how external traffic reaches it. There are two major options.

Option 1: Using Load Balancer (Highly recommended)

A load balancer exposes an application running in the Kubernetes cluster to the external network. It provides a single IP address that routes incoming requests to the Ingress controller. To create Kubernetes Services of type LoadBalancer successfully, you need a load balancer implementation running either inside or outside Kubernetes.

When a Service is deployed in a cloud environment, a load balancer is available to it by default, and the Ingress Service should get the LB IP address automatically. For bare-metal installations you’ll need to deploy a load balancer implementation for Kubernetes; we recommend MetalLB, installed by following its official installation guide.
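Once installed, MetalLB still needs a pool of addresses it may hand out to LoadBalancer Services. Assuming MetalLB v0.13 or later, which is configured through custom resources in the `metallb-system` namespace, the sketch below prints an `IPAddressPool` plus an `L2Advertisement` for layer 2 mode. The function name, the pool name and the address range are hypothetical; pick unused addresses on the same network as your nodes.

```shell
# Hypothetical helper: print a MetalLB address pool and a layer 2 advertisement for it.
# Arguments: pool name, address range (e.g. 192.168.1.30-192.168.1.40).
render_metallb_pool() {
  pool="$1"; range="$2"
  cat <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: ${pool}
  namespace: metallb-system
spec:
  addresses:
  - ${range}
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: ${pool}-l2
  namespace: metallb-system
spec:
  ipAddressPools:
  - ${pool}
EOF
}
```

For example, `render_metallb_pool lan-pool 192.168.1.30-192.168.1.40 | kubectl apply -f -`. Older MetalLB releases were configured through a ConfigMap instead, so check which version you installed.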

1. Setting Nginx Ingress to use MetalLB

Check Nginx Ingress service.

$ kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.108.4.75 <none> 80:30084/TCP,443:30540/TCP 5m55s

ingress-nginx-controller-admission ClusterIP 10.105.200.185 <none> 443/TCP 5m55s

If your Kubernetes cluster is a “real” cluster that supports services of type LoadBalancer, it will have allocated an external IP address or FQDN to the ingress controller.

Use the following command to see that IP address or FQDN:

$ kubectl get service ingress-nginx-controller --namespace=ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.108.4.75 <none> 80:30084/TCP,443:30540/TCP 7m53s

Patch the ingress-nginx-controller Service, setting its type to LoadBalancer:

kubectl -n ingress-nginx patch svc ingress-nginx-controller --type='json' -p '[{"op":"replace","path":"/spec/type","value":"LoadBalancer"}]'

Confirm that the Service was patched successfully:

service/ingress-nginx-controller patched

The Service is assigned an IP address automatically from the address pool configured in MetalLB:

$ kubectl get service ingress-nginx-controller --namespace=ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

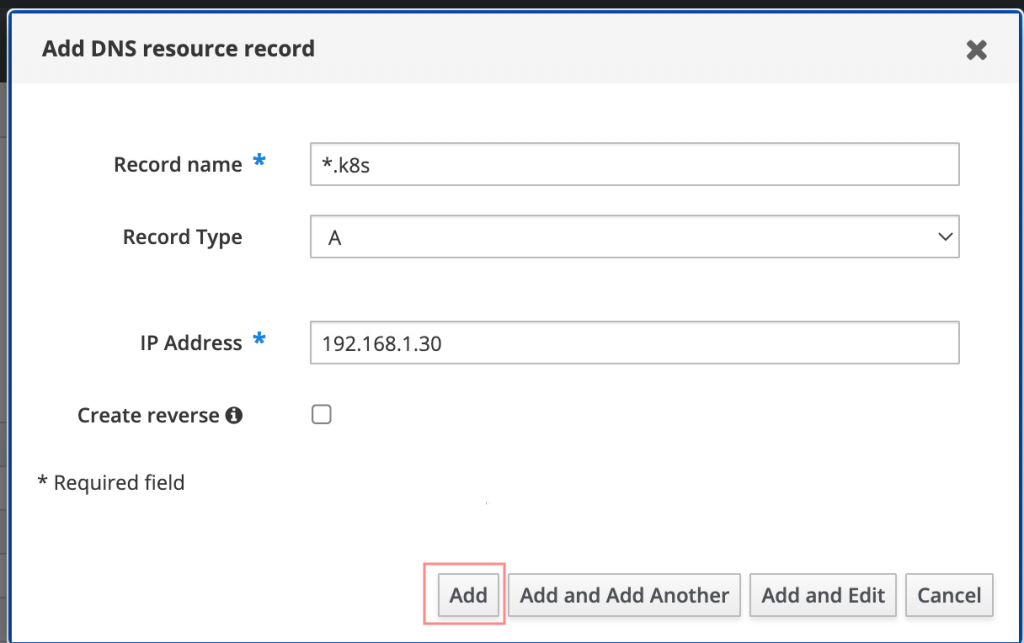

ingress-nginx-controller LoadBalancer 10.108.4.75 192.168.1.30 80:30084/TCP,443:30540/TCP 10m2. Mapping DNS name for Nginx Ingresses to LB IP

Create a DNS name, preferably a wildcard record, that points to the load balancer IP, for use when creating Ingress routes in Kubernetes. Our cluster uses k8s.example.com as its base domain, so we’ll use the wildcard domain *.k8s.example.com for Ingress.
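How the wildcard record is created depends on your DNS server. Assuming a BIND-style zone file, the sketch below prints the single line that sends every name under the base domain to the load balancer IP shown above. The function name is hypothetical and the 300-second TTL is an arbitrary choice.

```shell
# Hypothetical helper: print a BIND zone-file wildcard A record.
# Arguments: base domain, load balancer IP, optional TTL in seconds (default 300).
render_wildcard_record() {
  base_domain="$1"; lb_ip="$2"; ttl="${3:-300}"
  printf '*.%s.\t%s\tIN\tA\t%s\n' "$base_domain" "$ttl" "$lb_ip"
}
```

`render_wildcard_record k8s.example.com 192.168.1.30` prints `*.k8s.example.com.` followed by the TTL, `IN A` and the IP. Before the record is live, you can still test a route by pinning the name to the IP with curl, e.g. `curl --resolve webapp.k8s.example.com:80:192.168.1.30 http://webapp.k8s.example.com/`.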

Option 2: Using Specific Nodes to run Nginx Ingress Pods (NOT recommended)

This is not a recommended implementation; it is kept here only as reference documentation.

1. Label nodes that will run Ingress Controller Pods

A node selector is used when a Pod or group of Pods must be deployed on a specific group of nodes, namely the nodes whose labels match the criteria defined in the Pod specification.

List nodes:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster01.example.com Ready control-plane,master 14h v1.23.5

k8smaster02.example.com Ready control-plane,master 14h v1.23.5

k8sworker01.example.com Ready <none> 13h v1.23.5

k8sworker02.example.com Ready <none> 13h v1.23.5

k8sworker03.example.com Ready <none> 13h v1.23.5

2. Edit ingress-nginx-controller service and set externalIPs

In a private infrastructure Kubernetes deployment, you are unlikely to have support for Services of type LoadBalancer.

$ kubectl get svc -n ingress-nginx ingress-nginx-controller

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.101.4.21 <none> 80:30248/TCP,443:30773/TCP 3m53s

Notice that the Service is of type NodePort. We’ll update the Service so that Ingress binds to specific IP addresses, using External IPs.

Kubernetes supports assigning external IP addresses to a Service through its spec.externalIPs field. This exposes additional virtual IP addresses on the NGINX Ingress Controller Service: traffic that reaches a node on one of these IPs and the Service port is routed to the Service endpoints. This allows us to direct traffic to a local node for load balancing.

In my Kubernetes cluster I have two control plane nodes with the following primary IP addresses:

- k8smaster01.example.com – 192.168.42.245

- k8smaster02.example.com – 192.168.42.246
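Rather than typing the node IPs into the patch file by hand, the patch can be generated from whatever IPs you pass in. The helper below is a hypothetical sketch that prints the same structure as the `external-ips.yaml` file used in this section.

```shell
# Hypothetical helper: print a Service patch that sets spec.externalIPs
# to every IP address given as an argument.
render_external_ips_patch() {
  printf 'spec:\n  externalIPs:\n'
  for ip in "$@"; do
    printf '  - %s\n' "$ip"
  done
}
```

To feed it the InternalIP of every control plane node, something like `ips=$(kubectl get nodes -l node-role.kubernetes.io/control-plane -o jsonpath='{.items[*].status.addresses[?(@.type=="InternalIP")].address}')` followed by `kubectl -n ingress-nginx patch svc ingress-nginx-controller --patch "$(render_external_ips_patch $ips)"` should work; `$ips` is left unquoted on purpose so that each address becomes a separate argument.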

IP addresses of the nodes can be checked by running the command:

kubectl get nodes -o wide

I’ll create a file containing the modification that adds External IPs to the Service:

$ vim external-ips.yaml

spec:
  externalIPs:
  - 192.168.42.245
  - 192.168.42.246

Let’s now apply the patch to the service.

$ kubectl -n ingress-nginx patch svc ingress-nginx-controller --patch "$(cat external-ips.yaml)"

service/ingress-nginx-controller patched

Check the Service after applying the patch to confirm that the External IPs were added:

$ kubectl get svc ingress-nginx-controller -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.101.4.21 192.168.42.245,192.168.42.246 80:30248/TCP,443:30773/TCP 8m31

3. Running ingress-nginx-controller Pods on Control Plane (Master) Nodes

You can consider running the Ingress Controller Pods on the master nodes. To achieve this, we’ll label the master nodes and then use a node selector to assign the Ingress controller Pods to the control plane nodes.

$ kubectl get nodes -l node-role.kubernetes.io/control-plane

NAME STATUS ROLES AGE VERSION

k8smaster01.example.com Ready control-plane,master 16d v1.23.5

k8smaster02.example.com Ready control-plane,master 16d v1.23.5

Add the label runingress=nginx to the master nodes (it could be any other nodes in the cluster):

kubectl label node k8smaster01.example.com runingress=nginx

kubectl label node k8smaster02.example.com runingress=nginx

Labels added to a node can be checked using the command:

kubectl describe node <node-name>

Example:

$ kubectl describe node k8smaster01.example.com

Name: k8smaster01.example.com

Roles: control-plane,master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=k8smaster01.example.com

kubernetes.io/os=linux

node-role.kubernetes.io/control-plane=

node-role.kubernetes.io/master=

node.kubernetes.io/exclude-from-external-load-balancers=

runingress=nginx # Label added

.....

Create a patch file to run the Pods on nodes with the label runingress=nginx:

$ vim node-selector-patch.yaml

spec:
  template:
    spec:
      nodeSelector:
        runingress: nginx

Apply the patch to add the node selector:

$ kubectl get deploy -n ingress-nginx

NAME READY UP-TO-DATE AVAILABLE AGE

ingress-nginx-controller 1/1 1 1 20m

$ kubectl -n ingress-nginx patch deployment/ingress-nginx-controller --patch "$(cat node-selector-patch.yaml)"

deployment.apps/ingress-nginx-controller patched

4. Add tolerations to allow Nginx ingress Pods to run in Control Plane nodes

By default, control plane (master) nodes carry a NoSchedule taint so that regular Pods are not scheduled on them. To have the Ingress Pods run on the master nodes, you’ll have to add matching tolerations.
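Clusters built with kubeadm taint control plane nodes with `node-role.kubernetes.io/control-plane:NoSchedule`, and older kubeadm releases used `node-role.kubernetes.io/master:NoSchedule`; these taints normally have an empty value. As a compact alternative to the patch file that follows, the sketch below uses `operator: Exists`, which tolerates a taint with the given key and effect whatever its value. The function name is hypothetical.

```shell
# Hypothetical helper: print a Deployment patch whose tolerations match the
# control plane taints regardless of value (operator: Exists instead of Equal).
# Covers both the legacy "master" key and the current "control-plane" key.
render_control_plane_tolerations_patch() {
  cat <<'EOF'
spec:
  template:
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
EOF
}
```

It could be applied with `kubectl -n ingress-nginx patch deployment/ingress-nginx-controller --patch "$(render_control_plane_tolerations_patch)"`, and the actual taints on a node can be checked with `kubectl get node k8smaster01.example.com -o jsonpath='{.spec.taints}'`.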

Let’s create a patch file to apply tolerations on Ingress deployment.

$ vim master-node-tolerations.yaml

spec:
  template:
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Equal
        value: "true"
        effect: NoSchedule
      - key: node-role.kubernetes.io/master
        operator: Equal
        effect: NoSchedule
      - key: node-role.kubernetes.io/control-plane
        operator: Equal
        value: "true"
        effect: NoSchedule
      - key: node-role.kubernetes.io/control-plane
        operator: Equal
        effect: NoSchedule

Apply the patch:

kubectl -n ingress-nginx patch deployment/ingress-nginx-controller --patch "$(cat master-node-tolerations.yaml)"

Confirm that the newly created Pod has the node selector configured:

$ kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create--1-hpkzp 0/1 Completed 0 3d

ingress-nginx-admission-patch--1-qnjlj 0/1 Completed 1 3d

ingress-nginx-controller-57b46c846b-8n28t 1/1 Running 0 1m47s

$ kubectl describe pod ingress-nginx-controller-57b46c846b-8n28t

...omitted_output...

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

run-nginx-ingress=true

runingress=nginx

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

.....

5. Updating values.yaml to change parameters (only when using the Helm deployment)

Edit the values file:

vim values.yaml

Add tolerations for the master nodes:

controller:
  tolerations:
  - key: node-role.kubernetes.io/master
    operator: Equal
    value: "true"
    effect: NoSchedule
  - key: node-role.kubernetes.io/master
    operator: Equal
    effect: NoSchedule
  - key: node-role.kubernetes.io/control-plane
    operator: Equal
    value: "true"
    effect: NoSchedule
  - key: node-role.kubernetes.io/control-plane
    operator: Equal
    effect: NoSchedule

Set controller.service.externalIPs:

controller:
  service:
    externalIPs: ["192.168.42.245","192.168.42.246"]

To set the number of replicas of the Ingress controller deployment, use controller.replicaCount:

controller:
  replicaCount: 1

If you use a node selector to assign the Ingress controller Pods to nodes, set controller.nodeSelector:

controller:
  nodeSelector:
    kubernetes.io/os: linux
    runingress: "nginx"

Create the namespace:

kubectl create namespace ingress-nginx

Now deploy the NGINX Ingress Controller with the following command:

helm install -n ingress-nginx ingress-nginx -f values.yaml .

Step 3: Deploy Services to test Nginx Ingress functionality

Create a temporary namespace called demo:

kubectl create namespace demo

Create the test Pods and Services YAML file:

cd ~/

vim demo-app.yml

Paste the following data into the file:

kind: Pod
apiVersion: v1
metadata:
  name: apple-app
  labels:
    app: apple
spec:
  containers:
    - name: apple-app
      image: hashicorp/http-echo
      args:
        - "-text=apple"
---
kind: Service
apiVersion: v1
metadata:
  name: apple-service
spec:
  selector:
    app: apple
  ports:
    - port: 5678 # Default port for image
---
kind: Pod
apiVersion: v1
metadata:
  name: banana-app
  labels:
    app: banana
spec:
  containers:
    - name: banana-app
      image: hashicorp/http-echo
      args:
        - "-text=banana"
---
kind: Service
apiVersion: v1
metadata:
  name: banana-service
spec:
  selector:
    app: banana
  ports:
    - port: 5678 # Default port for image

Create the Pod and Service objects:

$ kubectl apply -f demo-app.yml -n demo

pod/apple-app created

service/apple-service created

pod/banana-app created

service/banana-service created

Check that the Pods are running:

$ kubectl get pods -n demo

NAME READY STATUS RESTARTS AGE

apple-app 1/1 Running 0 2m53s

banana-app 1/1 Running 0 2m52s

$ kubectl -n demo logs apple-app

2022/09/03 23:21:19 Server is listening on :5678

Create an Ubuntu Pod that will be used to test Service connectivity:

cat <<EOF | kubectl -n demo apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: ubuntu
  labels:
    app: ubuntu
spec:
  containers:
  - name: ubuntu
    image: ubuntu:latest
    command: ["/bin/sleep", "3650d"]
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF

Test Service connectivity within the namespace:

$ kubectl -n demo exec -ti ubuntu -- bash

root@ubuntu:/# apt update && apt install curl -y

root@ubuntu:/# curl apple-service:5678

apple

root@ubuntu:/# curl banana-service:5678

banana

Creating an ingress route

The Ingress resource definition can also be viewed with the kubectl explain command:

$ kubectl explain ingress

KIND: Ingress

VERSION: networking.k8s.io/v1

DESCRIPTION:

Ingress is a collection of rules that allow inbound connections to reach

the endpoints defined by a backend. An Ingress can be configured to give

services externally-reachable urls, load balance traffic, terminate SSL,

offer name based virtual hosting etc.

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an

object. Servers should convert recognized schemas to the latest internal

value, and may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

metadata <Object>

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object>

Spec is the desired state of the Ingress. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

status <Object>

Status is the current state of the Ingress. More info:

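https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

Now declare an Ingress. An Ingress can, for example, route requests for /apple to the first Service and requests for /banana to the second. The manifest below shows the general form: it routes all requests for the host webapp.k8s.example.com to a Service named web-server-service on port 80 in the web namespace (that namespace and Service are not created in this guide). Check out the Ingress rules field, which declares how requests are passed along.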

vim webapp-app-ingress.yml

For Kubernetes cluster version >= 1.19:

---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webapp-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: webapp.k8s.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web-server-service
            port:
              number: 80

Then apply the file to create the objects:

$ kubectl -n web apply -f webapp-app-ingress.yml

ingress.networking.k8s.io/webapp-ingress created

List the configured Ingress:

$ kubectl get ingress -n web

NAME CLASS HOSTS ADDRESS PORTS AGE

webapp-ingress nginx webapp.k8s.example.com 80 7s

Exec into an ingress controller Pod to check whether the NGINX configuration has been injected:

$ kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-6f5844d579-hwrqn 1/1 Running 0 53m

ingress-nginx-controller-6f5844d579-kvgtd 1/1 Running 0 25m

ingress-nginx-controller-6f5844d579-lcrrt 1/1 Running 0 25m

$ kubectl exec -n ingress-nginx -it ingress-nginx-controller-6f5844d579-hwrqn -- /bin/bash

bash-5.1$ less /etc/nginx/nginx.conf

Test the Service using curl:

$ curl http://webapp.k8s.example.com/

<html><body><h1>It works!</h1></body></html>

That’s it!
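The webapp Ingress above targets a web-server-service in the web namespace. To route the demo Services from Step 3 instead, sending /apple to apple-service and /banana to banana-service, a sketch like the following could be used. The function name, the Ingress name `fruit-ingress` and the default host `fruits.k8s.example.com` are hypothetical; the host only needs to fall under the `*.k8s.example.com` wildcard record.

```shell
# Hypothetical helper: print an Ingress that sends /apple to apple-service and
# /banana to banana-service (both listen on 5678, as defined in demo-app.yml).
# Optional argument: host name (defaults to a hypothetical fruits.k8s.example.com).
render_fruit_ingress() {
  host="${1:-fruits.k8s.example.com}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fruit-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: ${host}
    http:
      paths:
      - path: /apple
        pathType: Prefix
        backend:
          service:
            name: apple-service
            port:
              number: 5678
      - path: /banana
        pathType: Prefix
        backend:
          service:
            name: banana-service
            port:
              number: 5678
EOF
}
```

Apply it with `render_fruit_ingress | kubectl -n demo apply -f -`. Since http-echo answers with its text on any request path, `curl http://fruits.k8s.example.com/apple` should then return `apple`, and the `/banana` path should return `banana`.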