How To Deploy MetalLB Load Balancer on Kubernetes Cluster

When you deploy a Kubernetes cluster in a public cloud environment, network load balancers are available on demand. The same is not true for clusters deployed in a private cloud or on any kind of on-prem infrastructure. In these setups it’s the responsibility of system administrators and network engineers to integrate the Kubernetes cluster with whatever load balancers are in place.

To successfully create Kubernetes Services of type LoadBalancer, the cluster needs a load balancer implementation.

Since bare-metal environments lack load balancers by default, Services of type LoadBalancer remain in the “pending” state indefinitely when created. The tools commonly used to bring user traffic into bare-metal Kubernetes clusters are “NodePort” and “externalIPs”. In this article we’ll install the MetalLB load balancer on a Kubernetes cluster so that LoadBalancer Services give external consumers the same kind of access they would get in a public cloud.
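A quick way to spot Services stuck in the “pending” state is to filter the Service listing. The sketch below is illustrative only: pending_loadbalancers is a hypothetical helper name, and it assumes the default column layout printed by kubectl get svc --all-namespaces.

```shell
# List LoadBalancer Services whose EXTERNAL-IP is still <pending>.
# Reads the table printed by `kubectl get svc --all-namespaces` on stdin.
# Assumed columns: NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
pending_loadbalancers() {
  awk 'NR > 1 && $3 == "LoadBalancer" && $5 == "<pending>" { print $1 "/" $2 }'
}
```

Usage: kubectl get svc --all-namespaces | pending_loadbalancers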

What is MetalLB?

MetalLB is a pure software solution that provides a network load-balancer implementation, built on standard routing protocols, for Kubernetes clusters that are not deployed on a supported cloud provider. By installing MetalLB, you effectively get working LoadBalancer Services within your Kubernetes cluster.

MetalLB has the following requirements to function:

- A Kubernetes cluster on version 1.13.0 or later. The cluster should not already have other network load-balancing functionality.

- A cluster network configuration that can coexist with MetalLB.

- Availability of IPv4 addresses that MetalLB will assign to LoadBalancer services when requested.

- If using BGP operating mode, one or more routers capable of speaking BGP are required.

- When using the L2 operating mode, traffic on port 7946 (TCP and UDP; another port can be configured) must be allowed between nodes, as required by memberlist.
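The version requirement above can be checked with a small comparison helper. This is a minimal sketch, not part of MetalLB: version_ge is a hypothetical name, and it assumes a sort command that supports the -V (version sort) option, as GNU coreutils does.

```shell
# Succeed when version $1 is greater than or equal to version $2.
# Both arguments are plain dotted versions without a leading "v".
version_ge() {
  # The smaller of the two versions sorts first; if that is $2, then $1 >= $2.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

Usage: pass the server version reported by kubectl version with the leading v removed, for example version_ge 1.24.3 1.13.0 && echo "version is supported".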

Deploy MetalLB Load Balancer on Kubernetes Cluster

Confirm that your Kubernetes cluster API is responsive and that you’re able to use the kubectl command-line tool:

$ kubectl cluster-info

Kubernetes control plane is running at https://k8sapi.example.com:6443

CoreDNS is running at https://k8sapi.example.com:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Install the curl and wget utilities if they are not already available on your workstation:

### Debian / Ubuntu ###

sudo apt update

sudo apt install wget curl -y

### CentOS / RHEL / Fedora ###

sudo yum -y install wget curl

Download MetalLB installation manifest

Get the latest MetalLB release tag:

MetalLB_RTAG=$(curl -s https://api.github.com/repos/metallb/metallb/releases/latest|grep tag_name|cut -d '"' -f 4|sed 's/v//')

To check the release tag, use the echo command:

echo $MetalLB_RTAG

Create the directory where the manifests will be downloaded:

mkdir ~/metallb

cd ~/metallb

Download the MetalLB installation manifest:

wget https://raw.githubusercontent.com/metallb/metallb/v$MetalLB_RTAG/config/manifests/metallb-native.yaml

Below are the components in the manifest file:

- The metallb-system/controller deployment – Cluster-wide controller that handles IP address assignments.

- The metallb-system/speaker daemonset – Component that speaks the protocol(s) of your choice to make the services reachable.

- Service accounts for both controller and speaker, along with the RBAC permissions that the components need to function.
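To see for yourself what the downloaded manifest contains before applying it, you can count the resource kinds it declares. The helper below is a rough sketch: manifest_kinds is a hypothetical name, and it assumes each resource declares its kind: at the start of a line, so nested kind: fields are ignored.

```shell
# Count the top-level resource kinds declared in a multi-document manifest.
# $1 is the manifest path, e.g. ~/metallb/metallb-native.yaml
manifest_kinds() {
  grep '^kind:' "$1" | awk '{ print $2 }' | sort | uniq -c
}
```

Usage: manifest_kinds ~/metallb/metallb-native.yaml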

Install MetalLB Load Balancer on Kubernetes cluster

Install MetalLB in your Kubernetes cluster by applying the manifest:

$ kubectl apply -f metallb-native.yaml

namespace/metallb-system created

customresourcedefinition.apiextensions.k8s.io/addresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

serviceaccount/controller created

serviceaccount/speaker created

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/controller created

podsecuritypolicy.policy/speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/controller created

rolebinding.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

secret/webhook-server-cert created

service/webhook-service created

deployment.apps/controller created

daemonset.apps/speaker created

validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created

The command we executed deploys MetalLB to your Kubernetes cluster, under the metallb-system namespace. You can watch the resources come up with either of the following commands:

watch kubectl get all -n metallb-system

kubectl get pods -n metallb-system --watch

Wait for everything to be in the Running state, then list the running Pods:

$ kubectl get pods -n metallb-system

NAME                              READY   STATUS    RESTARTS   AGE
pod/controller-5bd9496b89-fkgwc   1/1     Running   0          7m25s
pod/speaker-58282                 1/1     Running   0          7m25s
pod/speaker-bwzfz                 1/1     Running   0          7m25s
pod/speaker-q78r9                 1/1     Running   0          7m25s
pod/speaker-vv6nr                 1/1     Running   0          7m25s

To list all resources in the namespace instead, use the command:

$ kubectl get all -n metallb-system

NAME                              READY   STATUS    RESTARTS   AGE
pod/controller-5bd9496b89-fkgwc   1/1     Running   0          7m25s
pod/speaker-58282                 1/1     Running   0          7m25s
pod/speaker-bwzfz                 1/1     Running   0          7m25s
pod/speaker-q78r9                 1/1     Running   0          7m25s
pod/speaker-vv6nr                 1/1     Running   0          7m25s

NAME                      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/webhook-service   ClusterIP   10.98.112.134   <none>        443/TCP   7m25s

NAME                     DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
daemonset.apps/speaker   4         4         4       4            4           kubernetes.io/os=linux   7m25s

NAME                         READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/controller   1/1     1            1           7m25s

NAME                                    DESIRED   CURRENT   READY   AGE
replicaset.apps/controller-5bd9496b89   1         1         1       7m25s

The installation manifest does not include the configuration that MetalLB needs in order to work. All MetalLB components are started, but they remain idle until you finish the necessary configuration.
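Before moving on to the configuration, it can help to block until the controller and the speakers have finished rolling out instead of watching the listing by hand. The sketch below wraps kubectl rollout status; the function name wait_for_metallb and the 120s default timeout are arbitrary choices for illustration.

```shell
# Block until the MetalLB controller Deployment and speaker DaemonSet
# have finished rolling out in the metallb-system namespace.
# $1 is the per-resource timeout (defaults to 120s, an arbitrary value).
wait_for_metallb() {
  timeout="${1:-120s}"
  kubectl rollout status deployment/controller -n metallb-system --timeout="$timeout" &&
    kubectl rollout status daemonset/speaker -n metallb-system --timeout="$timeout"
}
```

Usage: wait_for_metallb 90s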

Create Load Balancer services Pool of IP Addresses

MetalLB needs a pool of IP addresses that it can assign to services on request. We define that pool through the IPAddressPool custom resource (CR).

Let’s create a file that defines the addresses MetalLB may assign to services. In this configuration the pool covers the range 192.168.1.30-192.168.1.50.

$ vim ~/metallb/ipaddress_pools.yaml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: production
  namespace: metallb-system
spec:
  addresses:
  - 192.168.1.30-192.168.1.50

The addresses can be defined by CIDR or by range, and both IPv4 and IPv6 addresses can be assigned.

...
spec:
  addresses:
  - 192.168.1.0/24

A single IPAddressPool definition can list several address ranges. See the example below:

...
spec:
  addresses:
  - 192.168.1.0/24
  - 172.20.20.30-172.20.20.50
  - fc00:f853:0ccd:e799::/124

Announce service IPs after creation

This is a sample configuration used to advertise all IP address pools created in the cluster.

apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2-advert
  namespace: metallb-system

Advertisement can also be limited to a specific pool. In this example it is limited to the production pool.

apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: pool1-advert
  namespace: metallb-system
spec:
  ipAddressPools:
  - production

A complete configuration with both the IP address pool and the L2 advertisement is shown here.

$ vim ~/metallb/ipaddress_pools.yaml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: production
  namespace: metallb-system
spec:
  addresses:
  - 192.168.1.30-192.168.1.50
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2-advert
  namespace: metallb-system

Apply the configuration using kubectl command:

$ kubectl apply -f ~/metallb/ipaddress_pools.yaml

ipaddresspool.metallb.io/production created

l2advertisement.metallb.io/l2-advert created

List created IP Address Pools and Advertisements:

$ kubectl get ipaddresspools.metallb.io -n metallb-system

NAME AGE

production 23s

$ kubectl get l2advertisements.metallb.io -n metallb-system

NAME AGE

l2-advert 49s

Get more details using the kubectl describe command:

$ kubectl describe ipaddresspools.metallb.io production -n metallb-system

Name:         production
Namespace:    metallb-system
Labels:       <none>
Annotations:  <none>
API Version:  metallb.io/v1beta1
Kind:         IPAddressPool
Metadata:
  Creation Timestamp:  2022-08-03T22:49:25Z
  Generation:          1
  Managed Fields:
    API Version:  metallb.io/v1beta1
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:kubectl.kubernetes.io/last-applied-configuration:
      f:spec:
        .:
        f:addresses:
        f:autoAssign:
        f:avoidBuggyIPs:
    Manager:         kubectl-client-side-apply
    Operation:       Update
    Time:            2022-08-03T22:49:25Z
  Resource Version:  26542
  UID:               9ef543a2-a96f-4383-893e-2cd0ea1d8cbe
Spec:
  Addresses:
    192.168.1.30-192.168.1.50
  Auto Assign:       true
  Avoid Buggy I Ps:  false
Events:              <none>

Deploying services that use MetalLB LoadBalancer

With MetalLB installed and configured, we can test it by creating a service with spec.type set to LoadBalancer, and MetalLB will do the rest. This exposes the service externally.

MetalLB attaches informational events to the services that it’s controlling. If your LoadBalancer is misbehaving, run kubectl describe service <service name> and check the event log.
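As a convenience, the describe output and the events that reference a given Service can be pulled together in one step. This is only a sketch: service_events is a hypothetical helper name, and it relies on the involvedObject field selectors that kubectl get events accepts.

```shell
# Show a Service's description, then only the events that reference it.
# $1 = Service name, $2 = namespace (defaults to "default").
service_events() {
  svc="$1"
  ns="${2:-default}"
  kubectl describe service "$svc" -n "$ns"
  kubectl get events -n "$ns" \
    --field-selector "involvedObject.kind=Service,involvedObject.name=$svc"
}
```

Usage: service_events web-server-service web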

We can test with this service:

$ vim web-app-demo.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: web
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-server
  namespace: web
spec:
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: httpd
        image: httpd:alpine
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web-server-service
  namespace: web
spec:
  selector:
    app: web
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: LoadBalancer

Apply configuration manifest:

$ kubectl apply -f web-app-demo.yaml

namespace/web created

deployment.apps/web-server created

service/web-server-service created

Let’s check the IP assigned by Load Balancer to the service:

$ kubectl get svc -n web

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

web-server-service LoadBalancer 10.104.209.218 192.168.1.30 80:30510/TCP 105sTes

$ telnet 192.168.1.30 80

Trying 192.168.1.30...

Connected to 192.168.1.30.

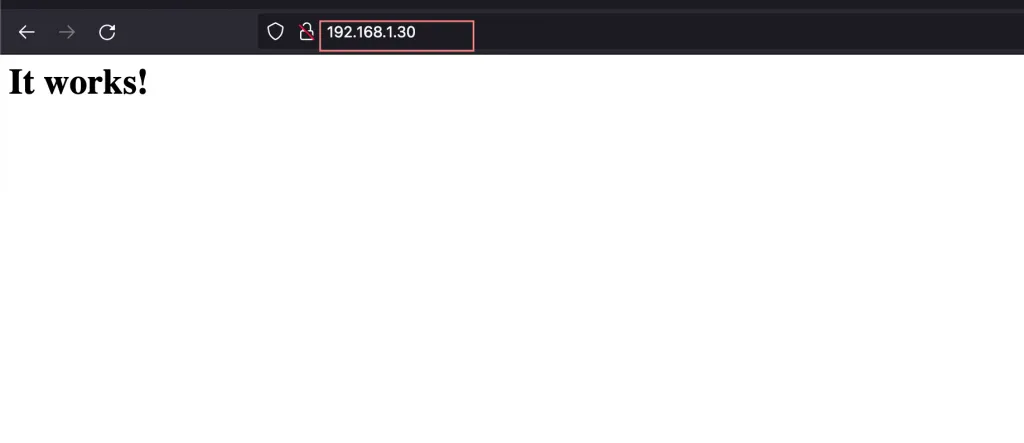

Escape character is '^]'.We can also access the service from a web console via http://192.168.1.30
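The assigned address can also be read programmatically rather than copied from the table. The following sketch uses a kubectl JSONPath query on the Service status; service_external_ip is a hypothetical helper name, and it assumes the load balancer reports an IP (not a hostname) in the first ingress entry.

```shell
# Print the first external IP that the load balancer assigned to a Service.
# $1 = Service name, $2 = namespace.
service_external_ip() {
  kubectl get svc "$1" -n "$2" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

Usage: curl -s "http://$(service_external_ip web-server-service web)"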

Requesting specific IP Address for a service

By default, MetalLB automatically assigns the next free IP address in the pool. It also respects the spec.loadBalancerIP parameter, so if you want your service to be set up with a specific address, you can request it by setting that parameter.
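A requested address should fall inside a range that MetalLB manages, otherwise the allocation is not expected to succeed. As a minimal sketch, the check below tests an IPv4 address against an inclusive range such as the 192.168.1.30-192.168.1.50 pool used in this guide; ip_to_int and ip_in_range are hypothetical helper names, not MetalLB tooling.

```shell
# Convert a dotted-quad IPv4 address to its integer value.
ip_to_int() {
  old_ifs="$IFS"
  IFS=.
  set -- $1          # split the address into its four octets
  IFS="$old_ifs"
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when address $1 lies within the inclusive range $2..$3.
ip_in_range() {
  ip=$(ip_to_int "$1")
  lo=$(ip_to_int "$2")
  hi=$(ip_to_int "$3")
  [ "$ip" -ge "$lo" ] && [ "$ip" -le "$hi" ]
}
```

Usage: ip_in_range 192.168.1.35 192.168.1.30 192.168.1.50 && echo "address is inside the pool"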

Delete the old deployment, then apply the manifest below to test:

$ kubectl delete -f web-app-demo.yaml

namespace "web" deleted

deployment.apps "web-server" deleted

service "web-server-service" deleted

Create a service with a specific IP address:

$ vim web-app-demo.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: web
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-server
  namespace: web
spec:
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: httpd
        image: httpd:alpine
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web-server-service
  namespace: web
spec:
  selector:
    app: web
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: LoadBalancer
  loadBalancerIP: 192.168.1.35

Apply the configuration to create the Pods and Service endpoints:

$ kubectl apply -f web-app-demo.yaml

namespace/web created

deployment.apps/web-server created

service/web-server-service created

Check the IP address assigned:

$ kubectl get svc -n web

NAME                 TYPE           CLUSTER-IP       EXTERNAL-IP    PORT(S)        AGE
web-server-service   LoadBalancer   10.102.255.187   192.168.1.35   80:32249/TCP   32s

Test the service:

$ curl http://192.168.1.35

<html><body><h1>It works!</h1></body></html>

With the IP address confirmed we can then destroy the service:

$ kubectl delete -f web-app-demo.yaml

namespace "web" deleted

deployment.apps "web-server" deleted

service "web-server-service" deleted

Choosing specific IP Address pool

When creating a service of type LoadBalancer, you can request a specific address pool. This is a feature supported by MetalLB out of the box.

A specific pool can be requested for IP address assignment by adding the metallb.universe.tf/address-pool annotation to your service, with the name of the address pool as the annotation value. See example:

apiVersion: v1
kind: Service
metadata:
  name: web-server-service
  namespace: web
  annotations:
    metallb.universe.tf/address-pool: production
spec:
  selector:
    app: web
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: LoadBalancer

Controlling automatic address allocation

By default, MetalLB allocates free IP addresses from any configured address pool. You may not want that for smaller pools of “expensive” IPs (e.g. leased public IPv4 addresses).

This behavior can be prevented by disabling automatic allocation for a pool, which is done by setting its autoAssign flag to false:

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: expensive
  namespace: metallb-system
spec:
  addresses:
  - 42.175.26.64/30
  autoAssign: false

Addresses can still be specifically allocated from the “expensive” pool with the methods described in the “Choosing specific IP Address pool” section.
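To get a feel for how small such a pool is, the arithmetic below counts the addresses in an IPv4 CIDR block. It is a rough sketch: cidr_size is a hypothetical helper name, and it counts every address in the block without modelling pool options such as avoidBuggyIPs.

```shell
# Print how many addresses an IPv4 CIDR block contains.
# $1 is a block in address/prefix form, e.g. 42.175.26.64/30
cidr_size() {
  prefix="${1#*/}"                      # keep only the prefix length
  echo $(( 1 << (32 - $prefix) ))
}
```

Usage: cidr_size 42.175.26.64/30 prints 4, so the “expensive” pool above holds just four addresses.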

IP Address Sharing

In Kubernetes, services don’t share IP addresses by default. If you need to colocate services on a single IP, add the metallb.universe.tf/allow-shared-ip annotation to enable selective IP sharing for those services.

The value of this annotation is a “sharing key”. For services to share an IP address, the following conditions have to be met:

- Both services should share the same sharing key.

- Services should use different ports (e.g. tcp/80 for one and tcp/443 for the other).

- The two services should use the Cluster external traffic policy, or they both point to the exact same set of pods (i.e. the pod selectors are identical).
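The first two conditions above can be sketched as a simple pre-check. This is a simplified illustration, not MetalLB’s own logic: can_share_ip is a hypothetical helper name, ports are written as protocol/port pairs such as tcp/80, and the traffic policy condition is deliberately left out.

```shell
# Succeed when two Services pass the first two sharing conditions:
# identical sharing keys and no overlapping protocol/port pairs.
# $1, $2 = sharing keys; $3, $4 = space-separated "proto/port" lists.
can_share_ip() {
  [ "$1" = "$2" ] || return 1
  for p in $3; do
    for q in $4; do
      # Any identical protocol/port pair is a conflict.
      [ "$p" = "$q" ] && return 1
    done
  done
  return 0
}
```

Usage: can_share_ip key-a key-a "tcp/53" "udp/53" && echo "ports do not collide"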

If you also set spec.loadBalancerIP, the two services share that specific address. Below is an example configuration of two services that share the same IP address:

apiVersion: v1
kind: Service
metadata:
  name: dns-service-tcp
  namespace: demo
  annotations:
    metallb.universe.tf/allow-shared-ip: "key-to-share-192.168.1.36"
spec:
  type: LoadBalancer
  loadBalancerIP: 192.168.1.36
  ports:
  - name: dnstcp
    protocol: TCP
    port: 53
    targetPort: 53
  selector:
    app: dns
---
apiVersion: v1
kind: Service
metadata:
  name: dns-service-udp
  namespace: demo
  annotations:
    metallb.universe.tf/allow-shared-ip: "key-to-share-192.168.1.36"
spec:
  type: LoadBalancer
  loadBalancerIP: 192.168.1.36
  ports:
  - name: dnsudp
    protocol: UDP
    port: 53
    targetPort: 53
  selector:
    app: dns

Setting Nginx Ingress to use MetalLB

If you’re using the Nginx Ingress Controller in your cluster, you can configure it to use MetalLB as its load balancer. Refer to the following guide for the configuration steps.